In our daily lives, we often find that the content we see on streaming media differs greatly from what others see. Even when two people watch the same video, the top comments each of them is shown often mirror that viewer's own opinions. This is the result of algorithmic bias in big-data recommendation systems. These systems label users based on how long they spend on certain pages and then push similar content to them again.

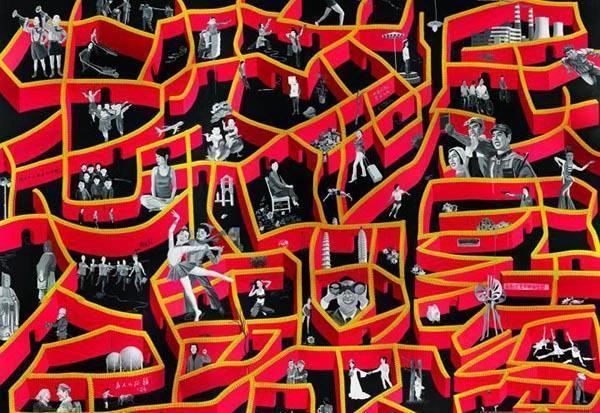
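The labeling step described above can be sketched in a few lines of Python. This is only a toy illustration, not how any real platform works: the viewing log, the catalog, and the function names `label_users` and `recommend` are all made up for the example, and real recommenders use far richer signals than total time spent.

```python
from collections import defaultdict

# Hypothetical viewing log: (user, topic of the page, seconds spent on it).
view_log = [
    ("alice", "cooking", 240),
    ("alice", "politics", 15),
    ("alice", "cooking", 180),
    ("bob", "politics", 300),
    ("bob", "sports", 40),
]

# Hypothetical catalog: each topic maps to the items tagged with it.
catalog = {
    "cooking": ["10-minute pasta", "knife skills"],
    "politics": ["election recap", "debate highlights"],
    "sports": ["match highlights"],
}


def label_users(log):
    """Label each user with the topic they spent the most total time on."""
    totals = defaultdict(lambda: defaultdict(int))
    for user, topic, seconds in log:
        totals[user][topic] += seconds
    return {user: max(topics, key=topics.get) for user, topics in totals.items()}


def recommend(user, labels, catalog):
    """Return only items that match the user's label (empty if unlabeled)."""
    return catalog.get(labels.get(user), [])


labels = label_users(view_log)
print(labels)
print(recommend("alice", labels, catalog))
```

In this sketch, a user who spends most of their time on one topic is only ever shown more of that topic. Nothing in `recommend` ever surfaces content outside the label, which is the narrowing effect the post is describing.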

Clearly, such algorithmic bias has significant drawbacks. People already tend to trust information that confirms what they believe, and the algorithm keeps feeding them more of it. The result is like being wrapped in labels: unable to see other opinions, they end up trapped in an information cocoon. And this already reflects the lives of the vast majority of people!

Algorithmic bias does have upsides, though. From an economic perspective, the more content people see that matches their tastes, the more willing they are to buy or use what is being shown. From a social perspective, it also helps keep the online environment peaceful. In the early days of the internet, arguments and insults were difficult to avoid, but now that groups of like-minded people are viewing the same content, the probability of arguments is much lower.

Source: 信息茧房 ("Information Cocoon"), Baidu Baike (百度百科)

I like how you explain algorithmic bias in such a clear and relatable way, especially the image of people being wrapped in labels inside their own information cocoon. It also connects to Eli Pariser's filter bubble idea, and I think your streaming media example makes it super easy to understand. Another point I want to highlight is your observation that this affects online interactions and keeps the environment calmer, which shows that you're considering both the social and the economic aspects.